Mirror of https://github.com/qodo-ai/pr-agent.git

synced 2025-12-12 10:55:17 +00:00

Compare commits

18 commits

| SHA1 |
|---|
| bf5da9a9fb |
| ede3f82143 |
| 5ec92b3535 |
| 4a67e23eab |
| 3ce4780e38 |
| edd9ef9d4f |
| e661147a1d |
| d8dcc3ca34 |
| f7a4f3fc8b |
| 0bbad14c70 |
| 4c5d3d6a6e |
| a98eced418 |
| 6d22618756 |
| 8dbc53271e |
| 79e0ff03fa |
| bf1cc50ece |
| c3bc789cd3 |
| 9252cb88d8 |

14 changed files with 334 additions and 490 deletions

README.md (424)

|

|

@ -1,416 +1,36 @@

|

||||||

<div align="center">

|

# 🧠 PR Agent LEGACY STATUS (open source)

|

||||||

|

Originally created and open-sourced by Qodo - the team behind next-generation AI Code Review.

|

||||||

|

|

||||||

<div align="center">

|

## 🚀 About

|

||||||

|

PR Agent was the first AI assistant for pull requests, built by Qodo, and contributed to the open-source community.

|

||||||

|

It represents the first generation of intelligent code review - the project that started Qodo’s journey toward fully AI-driven development, Code Review.

|

||||||

|

If you enjoy this project, you’ll love the next-level PR Agent - Qodo free tier version, which is faster, smarter, and built for today’s workflows.

|

||||||

|

|

||||||

<picture>

|

🚀 Qodo includes a free user trial, 250 tokens, bonus tokens for active contributors, and 50% more advanced features than this open-source version.

|

||||||

<source media="(prefers-color-scheme: dark)" srcset="https://www.qodo.ai/wp-content/uploads/2025/02/PR-Agent-Purple-2.png">

|

|

||||||

<source media="(prefers-color-scheme: light)" srcset="https://www.qodo.ai/wp-content/uploads/2025/02/PR-Agent-Purple-2.png">

|

|

||||||

<img src="https://codium.ai/images/pr_agent/logo-light.png" alt="logo" width="330">

|

|

||||||

|

|

||||||

</picture>

|

If you have an open-source project, you can get the Qodo paid version for free for your project, powered by Google Gemini 2.5 Pro – [https://www.qodo.ai/solutions/open-source/](https://www.qodo.ai/solutions/open-source/)

|

||||||

<br/>

|

|

||||||

|

|

||||||

[Installation Guide](https://qodo-merge-docs.qodo.ai/installation/) |

|

|

||||||

[Usage Guide](https://qodo-merge-docs.qodo.ai/usage-guide/) |

|

|

||||||

[Tools Guide](https://qodo-merge-docs.qodo.ai/tools/) |

|

|

||||||

[Qodo Merge](https://qodo-merge-docs.qodo.ai/overview/pr_agent_pro/) 💎

|

|

||||||

|

|

||||||

## Open-Source AI-Powered Code Review Tool

|

|

||||||

|

|

||||||

**PR-Agent** is an open-source, AI-powered code review agent. It is the legacy project from which Qodo Merge 💎, a separate commercial product, originated. PR-Agent is maintained by the community as a gift to the community. We are looking for additional maintainers to help shape its future; please contact us if you are interested.

|

|

||||||

|

|

||||||

**[Qodo Merge](https://qodo-merge-docs.qodo.ai/overview/pr_agent_pro/) 💎** is a separate, enterprise-grade product with its own distinct features, zero-setup, and priority support.

|

|

||||||

|

|

||||||

---

|

---

|

||||||

|

|

||||||

### Quick Start Options

|

## ✨ Advanced Features in Qodo

|

||||||

|

|

||||||

| **Option** | **Best For** | **Setup Time** | **Cost** |

|

### 🧭 PR → Ticket Automation

|

||||||

|------------|--------------|----------------|----------|

|

Seamlessly links pull requests to your project tracking system for end-to-end visibility.

|

||||||

| **[PR-Agent (Open Source)](#-quick-start-for-pr-agent-open-source)** | Developers who want full control, self-hosting, or custom integrations | 5-15 minutes | Free |

|

|

||||||

| **[Qodo Merge](#-try-qodo-merge-zero-setup)** | Teams wanting zero-setup, enhancing the open-source features, additional enterprise features, and managed hosting | 2 minutes | Free tier available |

|

|

||||||

</div>

|

|

||||||

|

|

||||||

[](https://chromewebstore.google.com/detail/qodo-merge-ai-powered-cod/ephlnjeghhogofkifjloamocljapahnl)

|

### ✅ Auto Best Practices

|

||||||

[](https://github.com/apps/qodo-merge-pro/)

|

Learns your team’s standards and automatically enforces them during code reviews.

|

||||||

[](https://github.com/apps/qodo-merge-pro-for-open-source/)

|

|

||||||

[](https://discord.com/invite/SgSxuQ65GF)

|

|

||||||

<a href="https://github.com/Codium-ai/pr-agent/commits/main">

|

|

||||||

<img alt="GitHub" src="https://img.shields.io/github/last-commit/Codium-ai/pr-agent/main?style=for-the-badge" height="20">

|

|

||||||

</a>

|

|

||||||

</div>

|

|

||||||

|

|

||||||

## Table of Contents

|

### 🧪 Code Validation

|

||||||

|

Performs advanced static and semantic analysis to catch issues before merge.

|

||||||

|

|

||||||

- [PR-Agent vs Qodo Merge](#pr-agent-vs-qodo-merge)

|

### 💬 PR Chat Interface

|

||||||

- [Getting Started](#getting-started)

|

Lets you converse with your PR to explain, summarize, or suggest improvements instantly.

|

||||||

- [Why Use PR-Agent?](#why-use-pr-agent)

|

|

||||||

- [Features](#features)

|

|

||||||

- [See It in Action](#see-it-in-action)

|

|

||||||

- [Try It Now](#try-it-now)

|

|

||||||

- [Qodo Merge 💎](#qodo-merge-)

|

|

||||||

- [How It Works](#how-it-works)

|

|

||||||

- [Data Privacy](#data-privacy)

|

|

||||||

- [Contributing](#contributing)

|

|

||||||

- [Links](#links)

|

|

||||||

|

|

||||||

## PR-Agent vs Qodo Merge

|

### 🔍 Impact Evaluation

|

||||||

|

Analyzes the business and technical effect of each change before approval.

|

||||||

PR-Agent and Qodo Merge are now two completely different products that share a common history. PR-Agent is the original, legacy open-source project. Qodo Merge was initially built upon PR-Agent but has since evolved into a distinct product with a different feature set.

|

|

||||||

|

|

||||||

### PR-Agent (This Repository) - Open Source

|

|

||||||

|

|

||||||

✅ **What you get:**

|

|

||||||

- Complete source code access and customization

|

|

||||||

- Self-hosted deployment options

|

|

||||||

- Core AI review tools (`/describe`, `/review`, `/improve`, `/ask`)

|

|

||||||

- Support for GitHub, GitLab, BitBucket, Azure DevOps

|

|

||||||

- CLI usage for local development

|

|

||||||

- Free forever

|

|

||||||

|

|

||||||

⚙️ **What you need to manage:**

|

|

||||||

- Your own API keys (OpenAI, Claude, etc.)

|

|

||||||

- Infrastructure and hosting

|

|

||||||

- Updates and maintenance

|

|

||||||

- Configuration management

|

|

||||||

|

|

||||||

### Qodo Merge - A Separate Product

|

|

||||||

|

|

||||||

✅ **What you get (everything above plus):**

|

|

||||||

- Zero-setup installation (2-minute GitHub app install)

|

|

||||||

- Managed infrastructure and automatic updates

|

|

||||||

- Advanced features: CI feedback, code suggestions tracking, compliance (rules), custom prompts, and more

|

|

||||||

- Priority support and feature requests

|

|

||||||

- Enhanced privacy with zero data retention

|

|

||||||

- Free tier: 75 PR reviews/month per organization

|

|

||||||

|

|

||||||

💰 **Pricing:**

|

|

||||||

- Free tier available

|

|

||||||

- Paid plans for unlimited usage

|

|

||||||

- [View pricing details](https://www.qodo.ai/pricing/)

|

|

||||||

|

|

||||||

**👨💻 Developer Recommendation:** Start with PR-Agent if you want to experiment, customize heavily, or have specific self-hosting requirements. Choose Qodo Merge if you want to focus on coding rather than tool maintenance.

|

|

||||||

|

|

||||||

## Getting Started

|

|

||||||

|

|

||||||

### 🚀 Quick Start for PR-Agent (Open Source)

|

|

||||||

|

|

||||||

#### 1. Try it Instantly (No Setup)

|

|

||||||

Test PR-Agent on any public GitHub repository by commenting `@CodiumAI-Agent /improve`

|

|

||||||

|

|

||||||

#### 2. GitHub Action (Recommended)

|

|

||||||

Add automated PR reviews to your repository with a simple workflow file:

|

|

||||||

```yaml

|

|

||||||

# .github/workflows/pr-agent.yml

|

|

||||||

name: PR Agent

|

|

||||||

on:

|

|

||||||

pull_request:

|

|

||||||

types: [opened, synchronize]

|

|

||||||

jobs:

|

|

||||||

pr_agent_job:

|

|

||||||

runs-on: ubuntu-latest

|

|

||||||

steps:

|

|

||||||

- name: PR Agent action step

|

|

||||||

uses: Codium-ai/pr-agent@main

|

|

||||||

env:

|

|

||||||

OPENAI_KEY: ${{ secrets.OPENAI_KEY }}

|

|

||||||

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

|

||||||

```

|

|

||||||

[Full GitHub Action setup guide](https://qodo-merge-docs.qodo.ai/installation/github/#run-as-a-github-action)

|

|

||||||

|

|

||||||

#### 3. CLI Usage (Local Development)

|

|

||||||

Run PR-Agent locally on your repository:

|

|

||||||

```bash

|

|

||||||

pip install pr-agent

|

|

||||||

export OPENAI_KEY=your_key_here

|

|

||||||

pr-agent --pr_url https://github.com/owner/repo/pull/123 review

|

|

||||||

```

|

|

||||||

[Complete CLI setup guide](https://qodo-merge-docs.qodo.ai/usage-guide/automations_and_usage/#local-repo-cli)

|

|

||||||

|

|

||||||

#### 4. Other Platforms

|

|

||||||

- [GitLab webhook setup](https://qodo-merge-docs.qodo.ai/installation/gitlab/)

|

|

||||||

- [BitBucket app installation](https://qodo-merge-docs.qodo.ai/installation/bitbucket/)

|

|

||||||

- [Azure DevOps setup](https://qodo-merge-docs.qodo.ai/installation/azure/)

|

|

||||||

|

|

||||||

### 💎 Try Qodo Merge (Zero Setup)

|

|

||||||

|

|

||||||

If you prefer a hosted solution without managing infrastructure:

|

|

||||||

|

|

||||||

1. **[Install Qodo Merge GitHub App](https://github.com/marketplace/qodo-merge-pro)** (2 minutes)

|

|

||||||

2. **[FREE for Open Source](https://github.com/marketplace/qodo-merge-pro-for-open-source)**: Full features, zero cost for public repos

|

|

||||||

3. **Free Tier**: 75 PR reviews/month for private repos

|

|

||||||

4. **[View Plans & Pricing](https://www.qodo.ai/pricing/)**

|

|

||||||

|

|

||||||

[Complete Qodo Merge setup guide](https://qodo-merge-docs.qodo.ai/installation/qodo_merge/)

|

|

||||||

|

|

||||||

### 💻 Local IDE Integration

|

|

||||||

Receive automatic feedback in your IDE after each commit: [Qodo Merge post-commit agent](https://github.com/qodo-ai/agents/tree/main/agents/qodo-merge-post-commit)

|

|

||||||

|

|

||||||

|

|

||||||

[//]: # (## News and Updates)

|

|

||||||

|

|

||||||

[//]: # ()

|

|

||||||

[//]: # (## Aug 8, 2025)

|

|

||||||

|

|

||||||

[//]: # ()

|

|

||||||

[//]: # (Added full support for GPT-5 models. View the [benchmark results](https://qodo-merge-docs.qodo.ai/pr_benchmark/#pr-benchmark-results) for details on the performance of GPT-5 models in PR-Agent.)

|

|

||||||

|

|

||||||

[//]: # ()

|

|

||||||

[//]: # ()

|

|

||||||

[//]: # (## Jul 17, 2025)

|

|

||||||

|

|

||||||

[//]: # ()

|

|

||||||

[//]: # (Introducing `/compliance`, a new Qodo Merge 💎 tool that runs comprehensive checks for security, ticket requirements, codebase duplication, and custom organizational rules. )

|

|

||||||

|

|

||||||

[//]: # ()

|

|

||||||

[//]: # (<img width="384" alt="compliance-image" src="https://codium.ai/images/pr_agent/compliance_partial.png"/>)

|

|

||||||

|

|

||||||

[//]: # ()

|

|

||||||

[//]: # (Read more about it [here](https://qodo-merge-docs.qodo.ai/tools/compliance/))

|

|

||||||

|

|

||||||

[//]: # ()

|

|

||||||

[//]: # ()

|

|

||||||

[//]: # (## Jul 1, 2025)

|

|

||||||

|

|

||||||

[//]: # (You can now receive automatic feedback from Qodo Merge in your local IDE after each commit. Read more about it [here](https://github.com/qodo-ai/agents/tree/main/agents/qodo-merge-post-commit).)

|

|

||||||

|

|

||||||

[//]: # ()

|

|

||||||

[//]: # ()

|

|

||||||

[//]: # (## Jun 21, 2025)

|

|

||||||

|

|

||||||

[//]: # ()

|

|

||||||

[//]: # (v0.30 was [released](https://github.com/qodo-ai/pr-agent/releases))

|

|

||||||

|

|

||||||

[//]: # ()

|

|

||||||

[//]: # ()

|

|

||||||

[//]: # (## Jun 3, 2025)

|

|

||||||

|

|

||||||

[//]: # ()

|

|

||||||

[//]: # (Qodo Merge now offers a simplified free tier 💎.)

|

|

||||||

|

|

||||||

[//]: # (Organizations can use Qodo Merge at no cost, with a [monthly limit](https://qodo-merge-docs.qodo.ai/installation/qodo_merge/#cloud-users) of 75 PR reviews per organization.)

|

|

||||||

|

|

||||||

[//]: # ()

|

|

||||||

[//]: # ()

|

|

||||||

[//]: # (## Apr 30, 2025)

|

|

||||||

|

|

||||||

[//]: # ()

|

|

||||||

[//]: # (A new feature is now available in the `/improve` tool for Qodo Merge 💎 - Chat on code suggestions.)

|

|

||||||

|

|

||||||

[//]: # ()

|

|

||||||

[//]: # (<img width="512" alt="image" src="https://codium.ai/images/pr_agent/improve_chat_on_code_suggestions_ask.png" />)

|

|

||||||

|

|

||||||

[//]: # ()

|

|

||||||

[//]: # (Read more about it [here](https://qodo-merge-docs.qodo.ai/tools/improve/#chat-on-code-suggestions).)

|

|

||||||

|

|

||||||

[//]: # ()

|

|

||||||

[//]: # ()

|

|

||||||

[//]: # (## Apr 16, 2025)

|

|

||||||

|

|

||||||

[//]: # ()

|

|

||||||

[//]: # (New tool for Qodo Merge 💎 - `/scan_repo_discussions`.)

|

|

||||||

|

|

||||||

[//]: # ()

|

|

||||||

[//]: # (<img width="635" alt="image" src="https://codium.ai/images/pr_agent/scan_repo_discussions_2.png" />)

|

|

||||||

|

|

||||||

[//]: # ()

|

|

||||||

[//]: # (Read more about it [here](https://qodo-merge-docs.qodo.ai/tools/scan_repo_discussions/).)

|

|

||||||

|

|

||||||

## Why Use PR-Agent?

|

|

||||||

|

|

||||||

### 🎯 Built for Real Development Teams

|

|

||||||

|

|

||||||

**Fast & Affordable**: Each tool (`/review`, `/improve`, `/ask`) uses a single LLM call (~30 seconds, low cost)

|

|

||||||

|

|

||||||

**Handles Any PR Size**: Our [PR Compression strategy](https://qodo-merge-docs.qodo.ai/core-abilities/#pr-compression-strategy) effectively processes both small and large PRs

|

|

||||||

|

|

||||||

**Highly Customizable**: JSON-based prompting allows easy customization of review categories and behavior via [configuration files](pr_agent/settings/configuration.toml)

|

|

||||||

|

|

||||||

**Platform Agnostic**:

|

|

||||||

- **Git Providers**: GitHub, GitLab, BitBucket, Azure DevOps, Gitea

|

|

||||||

- **Deployment**: CLI, GitHub Actions, Docker, self-hosted, webhooks

|

|

||||||

- **AI Models**: OpenAI GPT, Claude, Deepseek, and more

|

|

||||||

|

|

||||||

**Open Source Benefits**:

|

|

||||||

- Full control over your data and infrastructure

|

|

||||||

- Customize prompts and behavior for your team's needs

|

|

||||||

- No vendor lock-in

|

|

||||||

- Community-driven development

|

|

||||||

|

|

||||||

## Features

|

|

||||||

|

|

||||||

<div style="text-align:left;">

|

|

||||||

|

|

||||||

PR-Agent and Qodo Merge offer comprehensive pull request functionalities integrated with various git providers:

|

|

||||||

|

|

||||||

| | | GitHub | GitLab | Bitbucket | Azure DevOps | Gitea |

|

|

||||||

|---------------------------------------------------------|----------------------------------------------------------------------------------------|:------:|:------:|:---------:|:------------:|:-----:|

|

|

||||||

| [TOOLS](https://qodo-merge-docs.qodo.ai/tools/) | [Describe](https://qodo-merge-docs.qodo.ai/tools/describe/) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

|

||||||

| | [Review](https://qodo-merge-docs.qodo.ai/tools/review/) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

|

||||||

| | [Improve](https://qodo-merge-docs.qodo.ai/tools/improve/) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

|

||||||

| | [Ask](https://qodo-merge-docs.qodo.ai/tools/ask/) | ✅ | ✅ | ✅ | ✅ | |

|

|

||||||

| | ⮑ [Ask on code lines](https://qodo-merge-docs.qodo.ai/tools/ask/#ask-lines) | ✅ | ✅ | | | |

|

|

||||||

| | [Help Docs](https://qodo-merge-docs.qodo.ai/tools/help_docs/?h=auto#auto-approval) | ✅ | ✅ | ✅ | | |

|

|

||||||

| | [Update CHANGELOG](https://qodo-merge-docs.qodo.ai/tools/update_changelog/) | ✅ | ✅ | ✅ | ✅ | |

|

|

||||||

| | [Add Documentation](https://qodo-merge-docs.qodo.ai/tools/documentation/) 💎 | ✅ | ✅ | | | |

|

|

||||||

| | [Analyze](https://qodo-merge-docs.qodo.ai/tools/analyze/) 💎 | ✅ | ✅ | | | |

|

|

||||||

| | [Auto-Approve](https://qodo-merge-docs.qodo.ai/tools/improve/?h=auto#auto-approval) 💎 | ✅ | ✅ | ✅ | | |

|

|

||||||

| | [CI Feedback](https://qodo-merge-docs.qodo.ai/tools/ci_feedback/) 💎 | ✅ | | | | |

|

|

||||||

| | [Compliance](https://qodo-merge-docs.qodo.ai/tools/compliance/) 💎 | ✅ | ✅ | ✅ | | |

|

|

||||||

| | [Custom Prompt](https://qodo-merge-docs.qodo.ai/tools/custom_prompt/) 💎 | ✅ | ✅ | ✅ | | |

|

|

||||||

| | [Generate Custom Labels](https://qodo-merge-docs.qodo.ai/tools/custom_labels/) 💎 | ✅ | ✅ | | | |

|

|

||||||

| | [Generate Tests](https://qodo-merge-docs.qodo.ai/tools/test/) 💎 | ✅ | ✅ | | | |

|

|

||||||

| | [Implement](https://qodo-merge-docs.qodo.ai/tools/implement/) 💎 | ✅ | ✅ | ✅ | | |

|

|

||||||

| | [Scan Repo Discussions](https://qodo-merge-docs.qodo.ai/tools/scan_repo_discussions/) 💎 | ✅ | | | | |

|

|

||||||

| | [Similar Code](https://qodo-merge-docs.qodo.ai/tools/similar_code/) 💎 | ✅ | | | | |

|

|

||||||

| | [Utilizing Best Practices](https://qodo-merge-docs.qodo.ai/tools/improve/#best-practices) 💎 | ✅ | ✅ | ✅ | | |

|

|

||||||

| | [PR Chat](https://qodo-merge-docs.qodo.ai/chrome-extension/features/#pr-chat) 💎 | ✅ | | | | |

|

|

||||||

| | [PR to Ticket](https://qodo-merge-docs.qodo.ai/tools/pr_to_ticket/) 💎 | ✅ | ✅ | ✅ | | |

|

|

||||||

| | [Suggestion Tracking](https://qodo-merge-docs.qodo.ai/tools/improve/#suggestion-tracking) 💎 | ✅ | ✅ | | | |

|

|

||||||

| | | | | | | |

|

|

||||||

| [USAGE](https://qodo-merge-docs.qodo.ai/usage-guide/) | [CLI](https://qodo-merge-docs.qodo.ai/usage-guide/automations_and_usage/#local-repo-cli) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

|

||||||

| | [App / webhook](https://qodo-merge-docs.qodo.ai/usage-guide/automations_and_usage/#github-app) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

|

||||||

| | [Tagging bot](https://github.com/Codium-ai/pr-agent#try-it-now) | ✅ | | | | |

|

|

||||||

| | [Actions](https://qodo-merge-docs.qodo.ai/installation/github/#run-as-a-github-action) | ✅ | ✅ | ✅ | ✅ | |

|

|

||||||

| | | | | | | |

|

|

||||||

| [CORE](https://qodo-merge-docs.qodo.ai/core-abilities/) | [Adaptive and token-aware file patch fitting](https://qodo-merge-docs.qodo.ai/core-abilities/compression_strategy/) | ✅ | ✅ | ✅ | ✅ | |

|

|

||||||

| | [Auto Best Practices 💎](https://qodo-merge-docs.qodo.ai/core-abilities/auto_best_practices/) | ✅ | | | | |

|

|

||||||

| | [Chat on code suggestions](https://qodo-merge-docs.qodo.ai/core-abilities/chat_on_code_suggestions/) | ✅ | ✅ | | | |

|

|

||||||

| | [Code Validation 💎](https://qodo-merge-docs.qodo.ai/core-abilities/code_validation/) | ✅ | ✅ | ✅ | ✅ | |

|

|

||||||

| | [Dynamic context](https://qodo-merge-docs.qodo.ai/core-abilities/dynamic_context/) | ✅ | ✅ | ✅ | ✅ | |

|

|

||||||

| | [Fetching ticket context](https://qodo-merge-docs.qodo.ai/core-abilities/fetching_ticket_context/) | ✅ | ✅ | ✅ | | |

|

|

||||||

| | [Global and wiki configurations](https://qodo-merge-docs.qodo.ai/usage-guide/configuration_options/) 💎 | ✅ | ✅ | ✅ | | |

|

|

||||||

| | [Impact Evaluation](https://qodo-merge-docs.qodo.ai/core-abilities/impact_evaluation/) 💎 | ✅ | ✅ | | | |

|

|

||||||

| | [Incremental Update](https://qodo-merge-docs.qodo.ai/core-abilities/incremental_update/) | ✅ | | | | |

|

|

||||||

| | [Interactivity](https://qodo-merge-docs.qodo.ai/core-abilities/interactivity/) | ✅ | ✅ | | | |

|

|

||||||

| | [Local and global metadata](https://qodo-merge-docs.qodo.ai/core-abilities/metadata/) | ✅ | ✅ | ✅ | ✅ | |

|

|

||||||

| | [Multiple models support](https://qodo-merge-docs.qodo.ai/usage-guide/changing_a_model/) | ✅ | ✅ | ✅ | ✅ | |

|

|

||||||

| | [PR compression](https://qodo-merge-docs.qodo.ai/core-abilities/compression_strategy/) | ✅ | ✅ | ✅ | ✅ | |

|

|

||||||

| | [PR interactive actions](https://www.qodo.ai/images/pr_agent/pr-actions.mp4) 💎 | ✅ | ✅ | | | |

|

|

||||||

| | [RAG context enrichment](https://qodo-merge-docs.qodo.ai/core-abilities/rag_context_enrichment/) | ✅ | | ✅ | | |

|

|

||||||

| | [Self reflection](https://qodo-merge-docs.qodo.ai/core-abilities/self_reflection/) | ✅ | ✅ | ✅ | ✅ | |

|

|

||||||

| | [Static code analysis](https://qodo-merge-docs.qodo.ai/core-abilities/static_code_analysis/) 💎 | ✅ | ✅ | | | |

|

|

||||||

- 💎 means this feature is available only in [Qodo Merge](https://www.qodo.ai/pricing/)

|

|

||||||

|

|

||||||

[//]: # (- Support for additional git providers is described in [here](./docs/Full_environments.md))

|

|

||||||

___

|

|

||||||

|

|

||||||

## See It in Action

|

|

||||||

|

|

||||||

</div>

|

|

||||||

<h4><a href="https://github.com/Codium-ai/pr-agent/pull/530">/describe</a></h4>

|

|

||||||

<div align="center">

|

|

||||||

<p float="center">

|

|

||||||

<img src="https://www.codium.ai/images/pr_agent/describe_new_short_main.png" width="512">

|

|

||||||

</p>

|

|

||||||

</div>

|

|

||||||

<hr>

|

|

||||||

|

|

||||||

<h4><a href="https://github.com/Codium-ai/pr-agent/pull/732#issuecomment-1975099151">/review</a></h4>

|

|

||||||

<div align="center">

|

|

||||||

<p float="center">

|

|

||||||

<kbd>

|

|

||||||

<img src="https://www.codium.ai/images/pr_agent/review_new_short_main.png" width="512">

|

|

||||||

</kbd>

|

|

||||||

</p>

|

|

||||||

</div>

|

|

||||||

<hr>

|

|

||||||

|

|

||||||

<h4><a href="https://github.com/Codium-ai/pr-agent/pull/732#issuecomment-1975099159">/improve</a></h4>

|

|

||||||

<div align="center">

|

|

||||||

<p float="center">

|

|

||||||

<kbd>

|

|

||||||

<img src="https://www.codium.ai/images/pr_agent/improve_new_short_main.png" width="512">

|

|

||||||

</kbd>

|

|

||||||

</p>

|

|

||||||

</div>

|

|

||||||

|

|

||||||

<div align="left">

|

|

||||||

|

|

||||||

</div>

|

|

||||||

<hr>

|

|

||||||

|

|

||||||

## Try It Now

|

|

||||||

|

|

||||||

Try the GPT-5 powered PR-Agent instantly on _your public GitHub repository_. Just mention `@CodiumAI-Agent` and add the desired command in any PR comment. The agent will generate a response based on your command.

|

|

||||||

For example, add a comment to any pull request with the following text:

|

|

||||||

|

|

||||||

```

|

|

||||||

@CodiumAI-Agent /review

|

|

||||||

```

|

|

||||||

|

|

||||||

and the agent will respond with a review of your PR.

|

|

||||||

|

|

||||||

Note that this is a promotional bot, suitable only for initial experimentation.

|

|

||||||

It does not have 'edit' access to your repo, for example, so it cannot update the PR description or add labels (`@CodiumAI-Agent /describe` will publish PR description as a comment). In addition, the bot cannot be used on private repositories, as it does not have access to the files there.

|

|

||||||

|

|

||||||

---

|

---

|

||||||

|

|

||||||

## Qodo Merge 💎

|

## ❤️ Community

|

||||||

|

This open-source release remains here as a community contribution from Qodo — the origin of modern AI-powered code collaboration.

|

||||||

[Qodo Merge](https://www.qodo.ai/pricing/) is a separate, enterprise-grade product that originated from the open-source PR-Agent.

|

We’re proud to share it and inspire developers worldwide.

|

||||||

|

|

||||||

### Key Differences from Open Source PR-Agent:

|

|

||||||

|

|

||||||

**Infrastructure & Management:**

|

|

||||||

- Fully managed hosting and automatic updates

|

|

||||||

- Zero-setup installation (GitHub/GitLab/BitBucket app)

|

|

||||||

- No need to manage API keys or infrastructure

|

|

||||||

|

|

||||||

**Enhanced Privacy:**

|

|

||||||

- Zero data retention policy

|

|

||||||

- No data used for model training

|

|

||||||

- Enterprise-grade security

|

|

||||||

|

|

||||||

**Additional Features:**

|

|

||||||

- Advanced code suggestions with tracking

|

|

||||||

- CI feedback analysis

|

|

||||||

- Custom prompts and labels

|

|

||||||

- Static code analysis integration

|

|

||||||

- Priority support

|

|

||||||

|

|

||||||

**Pricing:**

|

|

||||||

- Free tier: 75 PR reviews/month per organization

|

|

||||||

- Paid plans for unlimited usage

|

|

||||||

- Free for open source projects

|

|

||||||

|

|

||||||

See [complete feature comparison](https://qodo-merge-docs.qodo.ai/overview/pr_agent_pro/) for detailed differences.

|

|

||||||

|

|

||||||

## How It Works

|

|

||||||

|

|

||||||

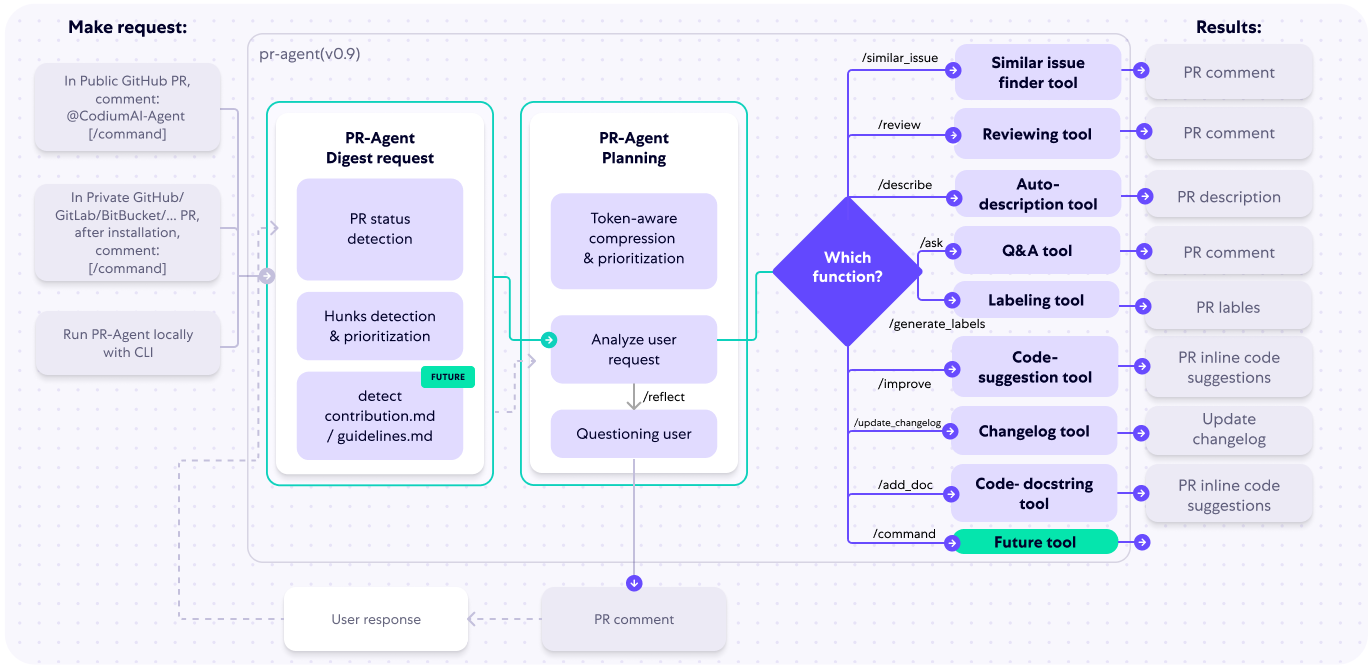

The following diagram illustrates PR-Agent tools and their flow:

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

Check out the [PR Compression strategy](https://qodo-merge-docs.qodo.ai/core-abilities/#pr-compression-strategy) page for more details on how we convert a code diff to a manageable LLM prompt

|

|

||||||

|

|

||||||

## Data Privacy

|

|

||||||

|

|

||||||

### Self-hosted PR-Agent

|

|

||||||

|

|

||||||

- If you host PR-Agent with your OpenAI API key, it is between you and OpenAI. You can read their API data privacy policy here:

|

|

||||||

https://openai.com/enterprise-privacy

|

|

||||||

|

|

||||||

### Qodo-hosted Qodo Merge 💎

|

|

||||||

|

|

||||||

- When using Qodo Merge 💎, hosted by Qodo, we will not store any of your data, nor will we use it for training. You will also benefit from an OpenAI account with zero data retention.

|

|

||||||

|

|

||||||

- For certain clients, Qodo-hosted Qodo Merge will use Qodo’s proprietary models — if this is the case, you will be notified.

|

|

||||||

|

|

||||||

- No passive collection of Code and Pull Requests’ data — Qodo Merge will be active only when you invoke it, and it will then extract and analyze only data relevant to the executed command and queried pull request.

|

|

||||||

|

|

||||||

### Qodo Merge Chrome extension

|

|

||||||

|

|

||||||

- The [Qodo Merge Chrome extension](https://chromewebstore.google.com/detail/qodo-merge-ai-powered-cod/ephlnjeghhogofkifjloamocljapahnl) serves solely to modify the visual appearance of a GitHub PR screen. It does not transmit any user's repo or pull request code. Code is only sent for processing when a user submits a GitHub comment that activates a PR-Agent tool, in accordance with the standard privacy policy of Qodo-Merge.

|

|

||||||

|

|

||||||

## Contributing

|

|

||||||

|

|

||||||

To contribute to the project, get started by reading our [Contributing Guide](https://github.com/qodo-ai/pr-agent/blob/b09eec265ef7d36c232063f76553efb6b53979ff/CONTRIBUTING.md).

|

|

||||||

|

|

||||||

## Links

|

|

||||||

|

|

||||||

- Discord community: https://discord.com/invite/SgSxuQ65GF

|

|

||||||

- Qodo site: https://www.qodo.ai/

|

|

||||||

- Blog: https://www.qodo.ai/blog/

|

|

||||||

- Troubleshooting: https://www.qodo.ai/blog/technical-faq-and-troubleshooting/

|

|

||||||

- Support: support@qodo.ai

|

|

||||||

|

|

|

||||||

|

|

````diff
@@ -54,17 +54,17 @@ A `PR Code Verified` label indicates the PR code meets ticket requirements, but
 
 #### Configuration options
 
 -
 
-By default, the tool will automatically validate if the PR complies with the referenced ticket.
+By default, the `review` tool will automatically validate if the PR complies with the referenced ticket.
 If you want to disable this feedback, add the following line to your configuration file:
 
 ```toml
 [pr_reviewer]
 require_ticket_analysis_review=false
 ```
 
 -
 
 If you set:
 ```toml
@@ -72,9 +72,36 @@ A `PR Code Verified` label indicates the PR code meets ticket requirements, but
 check_pr_additional_content=true
 ```
 (default: `false`)
 
 the `review` tool will also validate that the PR code doesn't contain any additional content that is not related to the ticket. If it does, the PR will be labeled at best as `PR Code Verified`, and the `review` tool will provide a comment with the additional unrelated content found in the PR code.
 
+### Compliance tool
+
+The `compliance` tool also uses ticket context to validate that PR changes fulfill the requirements specified in linked tickets.
+
+#### Configuration options
+
+-
+
+By default, the `compliance` tool will automatically validate if the PR complies with the referenced ticket.
+If you want to disable ticket compliance checking in the compliance tool, add the following line to your configuration file:
+
+```toml
+[pr_compliance]
+require_ticket_analysis_review=false
+```
+
+-
+
+If you set:
+```toml
+[pr_compliance]
+check_pr_additional_content=true
+```
+(default: `false`)
+
+the `compliance` tool will also validate that the PR code doesn't contain any additional content that is not related to the ticket.
+
 ## GitHub/Gitlab Issues Integration
 
 Qodo Merge will automatically recognize GitHub/Gitlab issues mentioned in the PR description and fetch the issue content.
````

````diff
@@ -8,7 +8,7 @@ By combining static code analysis with LLM capabilities, Qodo Merge can provide
 It scans the PR code changes, finds all the code components (methods, functions, classes) that changed, and enables to interactively generate tests, docs, code suggestions and similar code search for each component.
 
 !!! note "Language that are currently supported:"
-    Python, Java, C++, JavaScript, TypeScript, C#, Go, Ruby, PHP, Rust, Kotlin, Scala
+    Python, Java, C++, JavaScript, TypeScript, C#, Go, Kotlin
 
 ## Capabilities
 
````
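The only change in this hunk narrows the admonition's list of supported languages. To see exactly which entries were dropped, the short Python sketch below copies both lines verbatim from the hunk and compares them while preserving their original order; `parse` is just an illustrative helper name.

```python
# Language lists copied verbatim from the removed (-) and added (+) lines of the hunk.
OLD = "Python, Java, C++, JavaScript, TypeScript, C#, Go, Ruby, PHP, Rust, Kotlin, Scala"
NEW = "Python, Java, C++, JavaScript, TypeScript, C#, Go, Kotlin"


def parse(languages: str) -> list[str]:
    """Split a comma-separated list and trim surrounding whitespace."""
    return [name.strip() for name in languages.split(",")]


old_langs, new_langs = parse(OLD), parse(NEW)

# List comprehensions instead of set difference, so the original ordering is kept.
removed = [lang for lang in old_langs if lang not in new_langs]
added = [lang for lang in new_langs if lang not in old_langs]

print("removed:", removed)  # removed: ['Ruby', 'PHP', 'Rust', 'Scala']
print("added:", added)      # added: []
```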

````diff
@@ -70,18 +70,36 @@ A list of the models used for generating the baseline suggestions, and example r
 <td style="text-align:left;">'medium' (<a href="https://ai.google.dev/gemini-api/docs/openai">8000</a>)</td>
 <td style="text-align:center;"><b>57.7</b></td>
 </tr>
+<tr>
+<td style="text-align:left;">Gemini-3-pro-review</td>
+<td style="text-align:left;">2025-11-18</td>
+<td style="text-align:left;">high</td>
+<td style="text-align:center;"><b>57.3</b></td>
+</tr>
 <tr>
 <td style="text-align:left;">Gemini-2.5-pro</td>
 <td style="text-align:left;">2025-06-05</td>
 <td style="text-align:left;">4096</td>
 <td style="text-align:center;"><b>56.3</b></td>
 </tr>
+<tr>
+<td style="text-align:left;">Gemini-3-pro-review</td>
+<td style="text-align:left;">2025-11-18</td>
+<td style="text-align:left;">low</td>
+<td style="text-align:center;"><b>55.6</b></td>
+</tr>
 <tr>
 <td style="text-align:left;">Claude-haiku-4.5</td>
 <td style="text-align:left;">2025-10-01</td>
 <td style="text-align:left;">4096</td>
 <td style="text-align:center;"><b>48.8</b></td>
 </tr>
+<tr>
+<td style="text-align:left;">GPT-5.1</td>
+<td style="text-align:left;">2025-11-13</td>
+<td style="text-align:left;">medium</td>
+<td style="text-align:center;"><b>44.9</b></td>
````

|

||||||

|

</tr>

|

||||||

<tr>

|

<tr>

|

||||||

<td style="text-align:left;">Gemini-2.5-pro</td>

|

<td style="text-align:left;">Gemini-2.5-pro</td>

|

||||||

<td style="text-align:left;">2025-06-05</td>

|

<td style="text-align:left;">2025-06-05</td>

|

||||||

|

|

@ -148,6 +166,12 @@ A list of the models used for generating the baseline suggestions, and example r

|

||||||

<td style="text-align:left;"></td>

|

<td style="text-align:left;"></td>

|

||||||

<td style="text-align:center;"><b>32.4</b></td>

|

<td style="text-align:center;"><b>32.4</b></td>

|

||||||

</tr>

|

</tr>

|

||||||

|

<tr>

|

||||||

|

<td style="text-align:left;">Claude-opus-4.5</td>

|

||||||

|

<td style="text-align:left;">2025-11-01</td>

|

||||||

|

<td style="text-align:left;">high</td>

|

||||||

|

<td style="text-align:center;"><b>30.3</b></td>

|

||||||

|

</tr>

|

||||||

<tr>

|

<tr>

|

||||||

<td style="text-align:left;">GPT-4.1</td>

|

<td style="text-align:left;">GPT-4.1</td>

|

||||||

<td style="text-align:left;">2025-04-14</td>

|

<td style="text-align:left;">2025-04-14</td>

|

||||||

|

|

@ -157,7 +181,7 @@ A list of the models used for generating the baseline suggestions, and example r

|

||||||

</tbody>

|

</tbody>

|

||||||

</table>

|

</table>

|

||||||

|

|

||||||

## Results Analysis

|

## Results Analysis (Latest Additions)

|

||||||

|

|

||||||

### GPT-5-pro

|

### GPT-5-pro

|

||||||

|

|

||||||

|

|

@ -212,6 +236,23 @@ Weaknesses:

|

||||||

- **False or harmful fixes:** Several answers introduce new compilation errors, propose out-of-scope changes, or violate explicit rules (e.g., adding imports, version bumps, touching untouched lines), reducing trustworthiness.

|

- **False or harmful fixes:** Several answers introduce new compilation errors, propose out-of-scope changes, or violate explicit rules (e.g., adding imports, version bumps, touching untouched lines), reducing trustworthiness.

|

||||||

- **Shallow coverage:** Even when it identifies one real issue it often stops there, missing additional critical problems found by stronger peers; breadth and depth are inconsistent.

|

- **Shallow coverage:** Even when it identifies one real issue it often stops there, missing additional critical problems found by stronger peers; breadth and depth are inconsistent.

|

||||||

|

|

||||||

|

### Gemini-3-pro-review (high thinking budget)

|

||||||

|

|

||||||

|

Final score: **57.3**

|

||||||

|

|

||||||

|

Strengths:

|

||||||

|

|

||||||

|

- **Good schema & format discipline:** Consistently returns well-formed YAML with correct fields and respects the 3-suggestion limit; rarely breaks the required output structure.

|

||||||

|

- **Reasonable guideline awareness:** Often recognises when a diff contains only data / translations and properly emits an empty list, avoiding over-reporting.

|

||||||

|

- **Clear, actionable patches when correct:** When it does find a bug it usually supplies minimal-diff, compilable code snippets with concise explanations, and occasionally surfaces issues no other model spotted.

|

||||||

|

|

||||||

|

Weaknesses:

|

||||||

|

|

||||||

|

- **Spot-coverage gaps on critical defects:** In a large share of cases it overlooks the principal regression the tests were written for, while fixating on minor style or performance nits.

|

||||||

|

- **False or speculative fixes:** A noticeable number of answers invent non-existent problems or propose changes that would not compile or would re-introduce removed behaviour.

|

||||||

|

- **Guideline violations creep in:** Sometimes touches unchanged lines, adds forbidden imports / labels, or supplies more than "critical" advice, showing imperfect rule adherence.

|

||||||

|

- **High variance / inconsistency:** Quality swings from best-in-class to harmful within consecutive examples, indicating unstable defect-prioritisation and review depth.

|

||||||

|

|

||||||

### Gemini-2.5 Pro (4096 thinking tokens)

|

### Gemini-2.5 Pro (4096 thinking tokens)

|

||||||

|

|

||||||

Final score: **56.3**

|

Final score: **56.3**

|

||||||

|

|

@ -230,6 +271,23 @@ Weaknesses:

|

||||||

- **False positives / speculative fixes:** In several cases it flags non-issues (style, performance, redundant code) or supplies debatable “improvements”, lowering precision and sometimes breaching the “critical bugs only” rule.

|

- **False positives / speculative fixes:** In several cases it flags non-issues (style, performance, redundant code) or supplies debatable “improvements”, lowering precision and sometimes breaching the “critical bugs only” rule.

|

||||||

- **Inconsistent error coverage:** For certain domains (build scripts, schema files, test code) it either returns an empty list when real regressions exist or proposes cosmetic edits, indicating gaps in specialised knowledge.

|

- **Inconsistent error coverage:** For certain domains (build scripts, schema files, test code) it either returns an empty list when real regressions exist or proposes cosmetic edits, indicating gaps in specialised knowledge.

|

||||||

|

|

||||||

|

### Gemini-3-pro-review (low thinking budget)

|

||||||

|

|

||||||

|

Final score: **55.6**

|

||||||

|

|

||||||

|

Strengths:

|

||||||

|

|

||||||

|

- **Concise, well-structured patches:** Suggestions are usually expressed in short, self-contained YAML items with clear before/after code blocks and just enough rationale, making them easy for reviewers to apply.

|

||||||

|

- **Good eye for crash-level defects:** When the model does spot a problem it often focuses on high-impact issues such as compile-time errors, NPEs, nil-pointer races, buffer overflows, etc., and supplies a minimal, correct fix.

|

||||||

|

- **High guideline compliance (format & scope):** In most cases it respects the 1-3-item limit and the "new lines only" rule, avoids changing imports, and keeps snippets syntactically valid.

|

||||||

|

|

||||||

|

Weaknesses:

|

||||||

|

|

||||||

|

- **Coverage inconsistency:** Many answers miss other obvious or even more critical regressions spotted by peers; breadth fluctuates from excellent to empty, leaving reviewers with partial insight.

|

||||||

|

- **False positives & speculative advice:** A noticeable share of suggestions target stylistic or non-critical tweaks, or even introduce wrong changes, betraying occasional mis-reading of the diff and hurting trust.

|

||||||

|

- **Rule violations still occur:** There are repeated instances of touching unchanged code, recommending version bumps/imports, mis-labelling severities, or outputting malformed snippets—showing lapses in instruction adherence.

|

||||||

|

- **Quality variance / empty outputs:** Some responses provide no suggestions despite real bugs, while others supply harmful fixes; this volatility lowers overall reliability.

|

||||||

|

|

||||||

### Claude-haiku-4.5 (4096 thinking tokens)

|

### Claude-haiku-4.5 (4096 thinking tokens)

|

||||||

|

|

||||||

Final score: **48.8**

|

Final score: **48.8**

|

||||||

|

|

@ -247,6 +305,22 @@ Weaknesses:

|

||||||

- **Inconsistent output robustness:** Several cases show truncated or malformed responses, reducing value despite correct analysis elsewhere.

|

- **Inconsistent output robustness:** Several cases show truncated or malformed responses, reducing value despite correct analysis elsewhere.

|

||||||

- **Frequent false negatives:** The model sometimes returns an empty list even when clear regressions exist, indicating conservative behaviour that misses mandatory fixes.

|

- **Frequent false negatives:** The model sometimes returns an empty list even when clear regressions exist, indicating conservative behaviour that misses mandatory fixes.

|

||||||

|

|

||||||

|

### GPT-5.1 ('medium' thinking budget)

|

||||||

|

|

||||||

|

Final score: **44.9**

|

||||||

|

|

||||||

|

Strengths:

|

||||||

|

|

||||||

|

- **High precision & guideline compliance:** When the model does emit suggestions they are almost always technically sound, respect the "new-lines-only / ≤3 suggestions / no-imports" rules, and are formatted correctly. It rarely introduces harmful changes and often provides clear, runnable patches.

|

||||||

|

- **Ability to spot subtle or unique defects:** In several cases the model caught a critical issue that most or all baselines missed, showing good deep-code reasoning when it does engage.

|

||||||

|

- **Good judgment on noise-free diffs:** On purely data or documentation changes the model frequently (and correctly) returns an empty list, avoiding false-positive "nit" feedback.

|

||||||

|

|

||||||

|

Weaknesses:

|

||||||

|

|

||||||

|

- **Very low recall / over-conservatism:** In a large fraction of examples it outputs an empty suggestion list while clear critical bugs exist (well over 50 % of cases), making it inferior to almost every baseline answer that offered any fix.

|

||||||

|

- **Narrow coverage when it speaks:** Even when it flags one bug, it often stops there and ignores other equally critical problems present in the same diff, leaving reviewers with partial insight.

|

||||||

|

- **Occasional misdiagnosis or harmful fix:** A minority of suggestions are wrong or counter-productive, showing that precision, while good, is not perfect.

|

||||||

|

|

||||||

### Claude-sonnet-4.5 (4096 thinking tokens)

|

### Claude-sonnet-4.5 (4096 thinking tokens)

|

||||||

|

|

||||||

Final score: **44.2**

|

Final score: **44.2**

|

||||||

|

|

@ -298,43 +372,6 @@ Weaknesses:

|

||||||

- **Guideline slips:** In several examples it edits unchanged lines, adds forbidden imports/version bumps, mis-labels severities, or supplies non-critical stylistic advice.

|

- **Guideline slips:** In several examples it edits unchanged lines, adds forbidden imports/version bumps, mis-labels severities, or supplies non-critical stylistic advice.

|

||||||

- **Inconsistent diligence:** Roughly a quarter of the cases return an empty list despite real problems, while others duplicate existing PR changes, indicating weak diff comprehension.

|

- **Inconsistent diligence:** Roughly a quarter of the cases return an empty list despite real problems, while others duplicate existing PR changes, indicating weak diff comprehension.

|

||||||

|

|

||||||

### Claude-4 Sonnet (4096 thinking tokens)

|

|

||||||

|

|

||||||

Final score: **39.7**

|

|

||||||

|

|

||||||

Strengths:

|

|

||||||

|

|

||||||

- **High guideline & format compliance:** Almost always returns valid YAML, keeps ≤ 3 suggestions, avoids forbidden import/boiler-plate changes and provides clear before/after snippets.

|

|

||||||

- **Good pinpoint accuracy on single issues:** Frequently spots at least one real critical bug and proposes a concise, technically correct fix that compiles/runs.

|

|

||||||

- **Clarity & brevity of patches:** Explanations are short, actionable, and focused on changed lines, making the advice easy for reviewers to apply.

|

|

||||||

|

|

||||||

Weaknesses:

|

|

||||||

|

|

||||||

- **Low coverage / recall:** Regularly surfaces only one minor issue (or none) while missing other, often more severe, problems caught by peer models.

|

|

||||||

- **High "empty-list" rate:** In many diffs the model returns no suggestions even when clear critical bugs exist, offering zero reviewer value.

|

|

||||||

- **Occasional incorrect or harmful fixes:** A non-trivial number of suggestions are speculative, contradict code intent, or would break compilation/runtime; sometimes duplicates or contradicts itself.

|

|

||||||

- **Inconsistent severity labelling & duplication:** Repeats the same point in multiple slots, marks cosmetic edits as "critical", or leaves `improved_code` identical to original.

|

|

||||||

|

|

||||||

|

|

||||||

### Claude-4 Sonnet

|

|

||||||

|

|

||||||

Final score: **39.0**

|

|

||||||

|

|

||||||

Strengths:

|

|

||||||

|

|

||||||

- **Consistently well-formatted & rule-compliant output:** Almost every answer follows the required YAML schema, keeps within the 3-suggestion limit, and returns an empty list when no issues are found, showing good instruction following.

|

|

||||||

|

|

||||||

- **Actionable, code-level patches:** When it does spot a defect the model usually supplies clear, minimal diffs or replacement snippets that compile / run, making the fix easy to apply.

|

|

||||||

|

|

||||||

- **Decent hit-rate on “obvious” bugs:** The model reliably catches the most blatant syntax errors, null-checks, enum / cast problems, and other first-order issues, so it often ties or slightly beats weaker baseline replies.

|

|

||||||

|

|

||||||

Weaknesses:

|

|

||||||

|

|

||||||

- **Shallow coverage:** It frequently stops after one easy bug and overlooks additional, equally-critical problems that stronger reviewers find, leaving significant risks unaddressed.

|

|

||||||

|

|

||||||

- **False positives & harmful fixes:** In a noticeable minority of cases it misdiagnoses code, suggests changes that break compilation or behaviour, or flags non-issues, sometimes making its output worse than doing nothing.

|

|

||||||

|

|

||||||

- **Drifts into non-critical or out-of-scope advice:** The model regularly proposes style tweaks, documentation edits, or changes to unchanged lines, violating the "critical new-code only" requirement.

|

|

||||||

|

|

||||||

### OpenAI codex-mini

|

### OpenAI codex-mini

|

||||||

|

|

||||||

|

|

@ -392,33 +429,33 @@ Final score: **32.8**

|

||||||

|

|

||||||

Strengths:

|

Strengths:

|

||||||

|

|

||||||

- **Focused and concise fixes:** When the model does detect a problem it usually proposes a minimal, well-scoped patch that compiles and directly addresses the defect without unnecessary noise.

|

- **Focused and concise fixes:** When the model does detect a problem it usually proposes a minimal, well-scoped patch that compiles and directly addresses the defect without unnecessary noise.

|

||||||

- **Good critical-bug instinct:** It often prioritises show-stoppers (compile failures, crashes, security issues) over cosmetic matters and occasionally spots subtle issues that all other reviewers miss.

|

- **Good critical-bug instinct:** It often prioritises show-stoppers (compile failures, crashes, security issues) over cosmetic matters and occasionally spots subtle issues that all other reviewers miss.

|

||||||

- **Clear explanations & snippets:** Explanations are short, readable and paired with ready-to-paste code, making the advice easy to apply.

|

- **Clear explanations & snippets:** Explanations are short, readable and paired with ready-to-paste code, making the advice easy to apply.

|

||||||

|

|

||||||

Weaknesses:

|

Weaknesses:

|

||||||

|

|

||||||

- **High miss rate:** In a large fraction of examples the model returned an empty list or covered only one minor issue while overlooking more serious newly-introduced bugs.

|

- **High miss rate:** In a large fraction of examples the model returned an empty list or covered only one minor issue while overlooking more serious newly-introduced bugs.

|

||||||

- **Inconsistent accuracy:** A noticeable subset of answers contain wrong or even harmful fixes (e.g., removing valid flags, creating compile errors, re-introducing bugs).

|

- **Inconsistent accuracy:** A noticeable subset of answers contain wrong or even harmful fixes (e.g., removing valid flags, creating compile errors, re-introducing bugs).

|

||||||

- **Limited breadth:** Even when it finds a real defect it rarely reports additional related problems that peers catch, leading to partial reviews.

|

- **Limited breadth:** Even when it finds a real defect it rarely reports additional related problems that peers catch, leading to partial reviews.

|

||||||

- **Occasional guideline slips:** A few replies modify unchanged lines, suggest new imports, or duplicate suggestions, showing imperfect compliance with instructions.

|

- **Occasional guideline slips:** A few replies modify unchanged lines, suggest new imports, or duplicate suggestions, showing imperfect compliance with instructions.

|

||||||

|

|

||||||

### GPT-4.1

|

### Claude-Opus-4.5 (high thinking budget)

|

||||||

|

|

||||||

Final score: **26.5**

|

Final score: **30.3**

|

||||||

|

|

||||||

Strengths:

|

Strengths:

|

||||||

|

|

||||||

- **Consistent format & guideline obedience:** Output is almost always valid YAML, within the 3-suggestion limit, and rarely touches lines not prefixed with "+".

|

- **High rule compliance & formatting:** Consistently produces valid YAML, respects the ≤3-suggestion limit, and usually confines edits to added lines, avoiding many guideline violations seen in peers.

|

||||||

- **Low false-positive rate:** When no real defect exists, the model correctly returns an empty list instead of inventing speculative fixes, avoiding the "noise" many baseline answers add.

|

- **Low false-positive rate:** Tends to stay silent unless convinced of a real problem; when the diff is a pure version bump / docs tweak it often (correctly) returns an empty list, beating noisier baselines.

|

||||||

- **Clear, concise patches when it does act:** In the minority of cases where it detects a bug, the fix is usually correct, minimal, and easy to apply.

|

- **Clear, focused patches when it fires:** In the minority of cases where it does spot a bug, it explains the issue crisply and supplies concise, copy-paste-able code snippets.

|

||||||

|

|

||||||

Weaknesses:

|

Weaknesses:

|

||||||

|

|

||||||

- **Very low recall / coverage:** In a large majority of examples it outputs an empty list or only 1 trivial suggestion while obvious critical issues remain unfixed; it systematically misses circular bugs, null-checks, schema errors, etc.

|

- **Very low recall:** In the vast majority of examples it misses obvious critical issues or suggests only a subset, frequently returning an empty list; this places it below most baselines on overall usefulness.

|

||||||

- **Shallow analysis:** Even when it finds one problem it seldom looks deeper, so more severe or additional bugs in the same diff are left unaddressed.

|

- **Shallow coverage:** Even when it catches a defect it typically lists a single point and overlooks other high-impact problems present in the same diff.

|

||||||

- **Occasional technical inaccuracies:** A noticeable subset of suggestions are wrong (mis-ordered assertions, harmful Bash `set` change, false dangling-reference claims) or carry metadata errors (mis-labeling files as "python").

|

- **Occasional incorrect or incomplete fixes:** A non-trivial number of suggestions are wrong, compile-breaking, duplicate unchanged code, or touch out-of-scope lines, reducing trust.

|

||||||

- **Repetitive / derivative fixes:** Many outputs duplicate earlier simplistic ideas (e.g., single null-check) without new insight, showing limited reasoning breadth.

|

- **Inconsistent severity tagging & duplication:** Sometimes mis-labels critical vs general, repeats the same suggestion, or leaves `improved_code` blocks empty.

|

||||||

|

|

||||||

## Appendix - Example Results

|

## Appendix - Example Results

|

||||||

|

|

||||||

|

|

|

||||||

|

|

@ -16,5 +16,5 @@ An example result:

|

||||||

|

|

||||||

{width=750}

|

{width=750}

|

||||||

|

|

||||||

!!! note "Language that are currently supported:"

|

!!! note "Languages that are currently supported:"

|

||||||

Python, Java, C++, JavaScript, TypeScript, C#, Go, Ruby, PHP, Rust, Kotlin, Scala

|

Python, Java, C++, JavaScript, TypeScript, C#, Go, Kotlin

|

||||||

|

|

|

||||||

|

|

@ -329,6 +329,10 @@ enable_global_pr_compliance = true

|

||||||

???+ example "Ticket compliance options"

|

???+ example "Ticket compliance options"

|

||||||

|

|

||||||

<table>

|

<table>

|

||||||

|

<tr>

|

||||||

|

<td><b>require_ticket_analysis_review</b></td>

|

||||||

|

<td>If set to true, the tool will fetch and analyze ticket context for compliance validation. Default is true.</td>

|

||||||

|

</tr>

|

||||||

<tr>

|

<tr>

|

||||||

<td><b>enable_ticket_labels</b></td>

|

<td><b>enable_ticket_labels</b></td>

|

||||||

<td>If set to true, the tool will add ticket compliance labels to the PR. Default is false.</td>

|

<td>If set to true, the tool will add ticket compliance labels to the PR. Default is false.</td>

|

||||||

|

|

|

||||||

|

|

@ -20,7 +20,7 @@ The tool will generate code suggestions for the selected component (if no compon

|

||||||

{width=768}

|

{width=768}

|

||||||

|

|

||||||

!!! note "Notes"

|

!!! note "Notes"

|

||||||

- Language that are currently supported by the tool: `Python, Java, C++, JavaScript, TypeScript, C#, Go, Ruby, PHP, Rust, Kotlin, Scala`

|

- Languages that are currently supported by the tool: `Python, Java, C++, JavaScript, TypeScript, C#, Go, Kotlin`

|

||||||

- This tool can also be triggered interactively by using the [`analyze`](./analyze.md) tool.

|

- This tool can also be triggered interactively by using the [`analyze`](./analyze.md) tool.

|

||||||

|

|

||||||

## Configuration options

|

## Configuration options

|

||||||

|

|

|

||||||

|

|

@ -20,7 +20,7 @@ The tool will generate tests for the selected component (if no component is stat

|

||||||

(Example taken from [here](https://github.com/Codium-ai/pr-agent/pull/598#issuecomment-1913679429)):

|

(Example taken from [here](https://github.com/Codium-ai/pr-agent/pull/598#issuecomment-1913679429)):

|

||||||

|

|

||||||

!!! note "Notes"

|

!!! note "Notes"

|

||||||

- The following languages are currently supported: `Python, Java, C++, JavaScript, TypeScript, C#, Go, Ruby, PHP, Rust, Kotlin, Scala`

|

- The following languages are currently supported: `Python, Java, C++, JavaScript, TypeScript, C#, Go, Kotlin`

|

||||||

- This tool can also be triggered interactively by using the [`analyze`](./analyze.md) tool.

|

- This tool can also be triggered interactively by using the [`analyze`](./analyze.md) tool.

|

||||||

|

|

||||||

## Configuration options

|

## Configuration options

|

||||||

|

|

|

||||||

|

|

@ -58,7 +58,7 @@ Then you can give a list of extra instructions to the `review` tool.

|

||||||

|

|

||||||

## Global configuration file 💎

|

## Global configuration file 💎

|

||||||

|

|

||||||

`Platforms supported: GitHub, GitLab, Bitbucket`

|

`Platforms supported: GitHub, GitLab (cloud), Bitbucket (cloud)`

|

||||||

|

|

||||||

If you create a repo called `pr-agent-settings` in your **organization**, its configuration file `.pr_agent.toml` will be used as a global configuration file for any other repo that belongs to the same organization.

|

If you create a repo called `pr-agent-settings` in your **organization**, its configuration file `.pr_agent.toml` will be used as a global configuration file for any other repo that belongs to the same organization.

|

||||||

Parameters from a local `.pr_agent.toml` file, in a specific repo, will override the global configuration parameters.

|

Parameters from a local `.pr_agent.toml` file, in a specific repo, will override the global configuration parameters.

|

||||||

|

|

@ -69,18 +69,21 @@ For example, in the GitHub organization `Codium-ai`:

|

||||||

|

|

||||||

- The repo [`https://github.com/Codium-ai/pr-agent`](https://github.com/Codium-ai/pr-agent/blob/main/.pr_agent.toml) inherits the global configuration file from `pr-agent-settings`.

|

- The repo [`https://github.com/Codium-ai/pr-agent`](https://github.com/Codium-ai/pr-agent/blob/main/.pr_agent.toml) inherits the global configuration file from `pr-agent-settings`.

|

||||||

|

|

||||||

### Bitbucket Organization level configuration file 💎

|

## Project/Group level configuration file 💎

|

||||||

|

|

||||||

|

`Platforms supported: GitLab, Bitbucket Data Center`

|

||||||

|

|

||||||

|

Create a repository named `pr-agent-settings` within a specific project (Bitbucket) or a group/subgroup (Gitlab).

|

||||||

|

The configuration file in this repository will apply to all repositories directly under the same project/group/subgroup.

|

||||||

|

|

||||||

|

!!! note "Note"

|

||||||

|

For GitLab, if a repository is nested in several subgroups, the lookup for a `pr-agent-settings` repo goes only one level above that repository.

|

||||||

|

|

||||||

|

|

||||||

|

## Organization level configuration file 💎

|

||||||

|

|

||||||

`Relevant platforms: Bitbucket Data Center`

|

`Relevant platforms: Bitbucket Data Center`

|

||||||

|

|

||||||

In Bitbucket Data Center, there are two levels where you can define a global configuration file:

|

|

||||||

|

|

||||||

- Project-level global configuration:

|

|

||||||

|

|

||||||

Create a repository named `pr-agent-settings` within a specific project. The configuration file in this repository will apply to all repositories under the same project.

|

|

||||||

|

|

||||||

- Organization-level global configuration:

|

|

||||||

|

|

||||||

Create a dedicated project to hold a global configuration file that affects all repositories across all projects in your organization.

|

Create a dedicated project to hold a global configuration file that affects all repositories across all projects in your organization.

|

||||||

|

|

||||||

**Setting up organization-level global configuration:**

|

**Setting up organization-level global configuration:**

|

||||||

|

|

|

||||||

|

|
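This hunk states an override rule: a repo's local `.pr_agent.toml` wins over the organization-level file in `pr-agent-settings`. The sketch below shows that rule under two assumptions. First, both files have already been parsed into nested dictionaries (for example with the standard-library `tomllib`). Second, overriding happens key by key inside each section. The `merge_settings` helper is hypothetical and is not Qodo Merge's actual loader. The keys are taken from this page and the values are purely illustrative.

```python
from typing import Any, Dict


def merge_settings(global_cfg: Dict[str, Any], local_cfg: Dict[str, Any]) -> Dict[str, Any]:
    """Return a new dict in which values from local_cfg override global_cfg.

    Hypothetical helper. Nested tables (TOML sections) are merged recursively,
    so a local file only needs to list the keys it wants to change.
    Neither input dict is mutated.
    """
    merged: Dict[str, Any] = dict(global_cfg)
    for key, local_value in local_cfg.items():
        global_value = merged.get(key)
        if isinstance(global_value, dict) and isinstance(local_value, dict):
            # Both files define the same section: merge it key by key.
            merged[key] = merge_settings(global_value, local_value)
        else:
            # Scalar, list, or a section only the local file has: local wins outright.
            merged[key] = local_value
    return merged


# Organization-wide defaults, as if read from pr-agent-settings/.pr_agent.toml (illustrative).
global_cfg = {
    "config": {"max_model_tokens": 32000},
    "pr_compliance": {"check_pr_additional_content": False},
}
# A single repo's .pr_agent.toml that changes only one key (illustrative).
local_cfg = {"pr_compliance": {"check_pr_additional_content": True}}

effective = merge_settings(global_cfg, local_cfg)
# effective["pr_compliance"]["check_pr_additional_content"] -> True
# effective["config"]["max_model_tokens"] -> 32000 (inherited from the global file)
```

Whether the real tool merges sections key by key or replaces whole sections is not stated here. The recursive merge is simply the reading under which the local file "overrides parameters" rather than discarding everything it does not mention.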

@ -32,6 +32,11 @@ MAX_TOKENS = {

|

||||||

'gpt-5-mini': 200000, # 200K, but may be limited by config.max_model_tokens

|

'gpt-5-mini': 200000, # 200K, but may be limited by config.max_model_tokens

|

||||||

'gpt-5': 200000,

|

'gpt-5': 200000,

|

||||||

'gpt-5-2025-08-07': 200000,

|

'gpt-5-2025-08-07': 200000,

|

||||||

|

'gpt-5.1': 200000,

|

||||||

|

'gpt-5.1-2025-11-13': 200000,

|

||||||

|

'gpt-5.1-chat-latest': 200000,

|

||||||

|

'gpt-5.1-codex': 200000,

|

||||||

|

'gpt-5.1-codex-mini': 200000,

|

||||||

'o1-mini': 128000, # 128K, but may be limited by config.max_model_tokens

|

'o1-mini': 128000, # 128K, but may be limited by config.max_model_tokens

|

||||||

'o1-mini-2024-09-12': 128000, # 128K, but may be limited by config.max_model_tokens

|

'o1-mini-2024-09-12': 128000, # 128K, but may be limited by config.max_model_tokens

|

||||||

'o1-preview': 128000, # 128K, but may be limited by config.max_model_tokens

|

'o1-preview': 128000, # 128K, but may be limited by config.max_model_tokens

|

||||||

|

|

|

||||||

|

|
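The `MAX_TOKENS` comments above note that a model's window "may be limited by config.max_model_tokens". The sketch below shows one plausible reading of that rule: the configured value caps the model's native window and never raises it. The `effective_max_tokens` function and its `max_model_tokens` parameter (0 meaning "no cap") are hypothetical stand-ins, not the project's real API. The dictionary is an excerpt of entries from this hunk.

```python
from typing import Dict

# Excerpt of the model-to-context-window table from this hunk.
MAX_TOKENS: Dict[str, int] = {
    "gpt-5": 200000,
    "gpt-5.1": 200000,
    "gpt-5.1-codex-mini": 200000,
    "o1-mini": 128000,
}


def effective_max_tokens(model: str, max_model_tokens: int = 0) -> int:
    """Return the token budget for `model`, optionally capped by a config limit.

    Hypothetical helper: `max_model_tokens` stands in for config.max_model_tokens,
    and 0 means "no extra cap".
    """
    if model not in MAX_TOKENS:
        raise KeyError(f"Unknown model {model!r}; add it to MAX_TOKENS first")
    limit = MAX_TOKENS[model]
    if max_model_tokens > 0:
        # The configured cap can only lower the native window, never raise it.
        limit = min(limit, max_model_tokens)
    return limit


print(effective_max_tokens("gpt-5.1"))            # 200000
print(effective_max_tokens("gpt-5.1", 32000))     # 32000
print(effective_max_tokens("o1-mini", 500000))    # 128000
```

Using `min` means a generous config value cannot push a request past what the model accepts. An unknown model raises instead of silently falling back to a guessed default.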

@ -1,4 +1,3 @@

|

||||||

import hashlib

|

|

||||||

import json

|

import json

|

||||||

from typing import Any, Dict, List, Optional, Set, Tuple

|

from typing import Any, Dict, List, Optional, Set, Tuple

|

||||||

from urllib.parse import urlparse

|

from urllib.parse import urlparse

|

||||||

|

|

@ -31,15 +30,15 @@ class GiteaProvider(GitProvider):

|

||||||

self.pr_url = ""

|

self.pr_url = ""

|

||||||

self.issue_url = ""

|

self.issue_url = ""

|

||||||

|

|

||||||

gitea_access_token = get_settings().get("GITEA.PERSONAL_ACCESS_TOKEN", None)

|

self.gitea_access_token = get_settings().get("GITEA.PERSONAL_ACCESS_TOKEN", None)

|

||||||

if not gitea_access_token:

|

if not self.gitea_access_token:

|

||||||

self.logger.error("Gitea access token not found in settings.")

|